DIREC project

Low-code programming of spatial contexts for logistic tasks in mobile robotics

Summary

Low-volume production makes up a large part of the Danish manufacturing industry. An unmet need in this industry is flexibility and adaptability in manufacturing processes. Existing solutions for automating industrial logistics tasks include combinations of automated storage, conveyor belts, and mobile robots with special loading and unloading docks.

However, these solutions require large investments and are not cost-effective for low-volume production. Moreover, low-volume production is often labor-intensive.

Together with industrial partners, this project will investigate production scenarios in which a machine can be operated by untrained personnel, using low-code development for adaptive and reconfigurable robot programming of logistics tasks.

Project period: 2022-2025

Budget: DKK 7.15 million

An unmet need in industry is flexibility and adaptability of manufacturing processes in low-volume production. Low-volume production represents a large share of the Danish manufacturing industry. Existing solutions for automating industrial logistics tasks include combinations of automated storage, conveyor belts, and mobile robots with special loading and unloading docks. However, these solutions require major investments and are not cost-efficient for low-volume production.

As a result, low-volume production is still labor-intensive today, since automation technology and software are not yet cost-effective for production scenarios in which a machine must be operable by untrained personnel. The need for flexibility, ease of programming, and fast adaptability of manufacturing processes is recognized in both Europe and the USA. EuRobotics highlights the need for systems that can be easily reprogrammed without skilled system configuration personnel. Furthermore, the American roadmap for robotics highlights adaptable and reconfigurable assembly and manipulation as an important capability for manufacturing.

The company Enabled Robotics (ER) aims to provide easy programming as an integral part of its products. Its mobile manipulator, ER-FLEX, consists of a robot arm mounted on a mobile platform. The ER-FLEX mobile collaborative robot provides an opportunity to automate logistics tasks in low-volume production. This includes manipulating objects in production in a less invasive and more cost-efficient way, reusing existing machinery and traditional storage racks. However, this setting also challenges the robot because of the variability in rack locations, shelf locations, box types, object types, and drop-off points.

Today, the ER-FLEX can be programmed by means of block-based features, which can be configured into high-level robot behaviors. While this approach makes programming easier, the operator must still have a good knowledge of robotics and programming to define the desired behavior. To make the product accessible to a wider audience of users in low-volume production companies, robot behaviors have to be defined in a simpler and more intuitive manner. In addition, a solution is needed that addresses this variability by programming the 3D spatial context in a time-efficient and adaptive way.

Low-code software development is an emerging research topic in software engineering. Research in this area has investigated software platforms that allow non-technical people to develop fully functional application software without using a general-purpose programming language. However, the scope of most low-code development platforms has been limited to creating software-only solutions for automating business processes of low to moderate complexity.

Programming robot tasks still relies on dedicated personnel with special training. In recent years, the emergence of digital twins, block-based programming languages, and collaborative robots that can be programmed by demonstration has brought a breakthrough in this field. However, existing solutions still lack the ability to address variability when programming logistics and manipulation tasks in an ever-changing environment.

Current low-code development platforms do not support robotic systems. The extensive use of hardware components and sensor data in robotics makes it challenging to translate low-level manipulations into a high-level language that non-programmers can understand. In this project, we will tackle this by constraining the problem to the spatial dimension and by using machine learning (ML) for adaptability. Accordingly, the first research question we will investigate is whether and how the low-code development paradigm can support robot programming of spatial logistics tasks in indoor environments. The second research question addresses how to apply ML-based methods to remap between high-level instructions and the physical world in order to derive and execute new task-specific robot manipulation and logistics actions.

The overall aim of this project is therefore to investigate the use of low-code development for adaptive and reconfigurable robot programming of logistics tasks. Through a case study proposed by ER, the project builds on previous work at the University of Southern Denmark (SDU) on domain-specific languages (DSLs). It will propose a solution for high-level programming of the 3D spatial context in natural language, and it will use machine learning for adaptable programming of robotic skills. Roskilde University (RUC) will contribute interaction design competences to optimize the usability of the approach.

Our research methodology follows design science, which provides a concrete framework for dynamic validation in an industrial setting. For the problem investigation, we plan a systematic literature review of existing solutions to 3D space mapping and to the variability of logistics tasks. For the design and implementation, we will first build a spatial representation of the task conditions and the environment using external sensors, which will give us a map for deploying the ER platform. Furthermore, to minimize the input users need to provide to link the programming parameters to the physical world, we will investigate and apply sensor-based user interface technologies and machine learning. The designed solutions will be integrated into a low-code development platform that enables high-level robot programming.

Finally, for validation, the resulting low-code development platform will be tested on logistics and manipulation tasks with the industry partner Enabled Robotics. Testing will take place both in a mock-up test setup to be established in the SDU I4.0 lab and at a customer site, with increasing difficulty in terms of variability.

Value creation

Making robotic solutions easier to program opens the technology to new users and to new use cases. This contributes to DIREC's long-term goal of building up research capacity, as the project focuses on building the competences needed to address challenges in software engineering, cyber-physical systems (robotics), interaction design, and machine learning.

Scientific value

The project’s scientific value lies in developing new methods and techniques for low-code programming of robotic systems, using novel user interface technologies and machine learning approaches to address variability. This addresses the current lack of approaches for low-code development of robotic skills for logistics tasks. We expect to publish at least four high-quality research articles and to demonstrate the potential of the developed technologies in concrete real-world applications.

Capacity building

The project will build and strengthen research capacity in Denmark directly through the education of one PhD candidate. It will also do so through collaboration between researchers, domain experts, and end users, which will lead to R&D growth in the industrial sector. In particular, it will build research competences at the intersection of software engineering and robotics to support the digital foundation of this sector.

Societal and business value

The project will create societal and business value by providing new solutions for programming robotic systems. A 2020 market report predicts that the market for autonomous mobile robots will grow from DKK 310M in 2021 to DKK 3,327M in 2024. Demand is expected from segments such as semiconductor manufacturing, automotive, automotive suppliers, pharma, and manufacturing in general. ER wants to tap into these market opportunities by providing an efficient and flexible solution for internal logistics. ER aims to position its solution with benefits such as smoother logistics, programmability by a broad customer base, and relief from labor shortages. This project enables ER to improve its product with regard to key parameters. The project will provide significant societal value and contribute directly to SDG 9 (Build resilient infrastructure, promote inclusive and sustainable industrialization, and foster innovation).

Value

The project will make a strong contribution to the digital foundation for robotics based on software competences, and will strengthen Denmark's position as a digital frontrunner in this field.

Participants

Project Manager

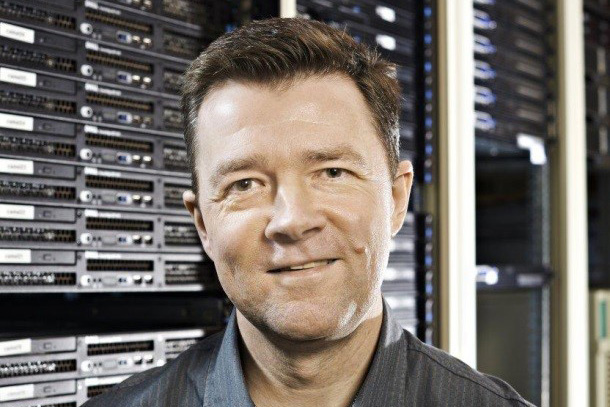

Thiago Rocha Silva

Associate Professor

University of Southern Denmark

Maersk Mc-Kinney Moller Institute

E: trsi@mmmi.sdu.dk

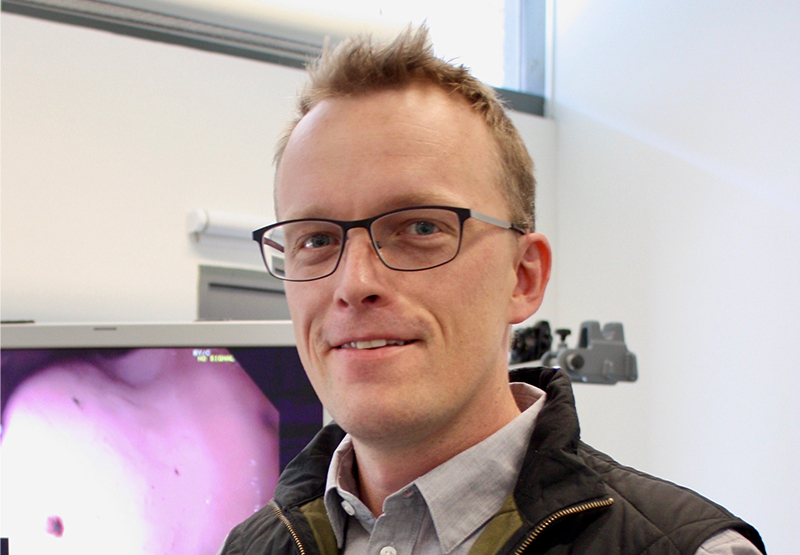

Aljaz Kramberger

Associate Professor

University of Southern Denmark

Maersk Mc-Kinney Moller Institute

Mikkel Baun Kjærgaard

Professor

University of Southern Denmark

Maersk Mc-Kinney Moller Institute

Mads Hobye

Associate Professor

Roskilde University

Department of People and Technology

Lars Peter Ellekilde

Chief Executive Officer

Enabled Robotics ApS

Anahide Silahli

PhD

University of Southern Denmark

Maersk Mc-Kinney Moller Institute

Partners