DIREC project

Automatic Tuning of Spin-qubit Arrays

Summary

Spin-qubit quantum-dot arrays are among the most promising candidates for universal quantum computing. But as arrays have grown, a bottleneck has emerged: tuning the many control parameters of an array by hand is time-consuming and very expensive. The nascent spin-qubit industry needs a platform of algorithms that can be fine-tuned to specific sensing hardware and that allows cold-start tuning of a device. Such a platform must include algorithms that are efficient, scalable, and robust against common problems in manufactured devices. The current landscape of automatic tuning algorithms does not fulfill these requirements. This project aims to overcome the major obstacles in developing such algorithms.

Project period: 2022-2025

Spin-qubit quantum-dot arrays are one of the most promising candidates for universal quantum computing. While manufacturing even a single dot used to be a challenge, multi-dot arrays are now becoming the norm. However, as arrays grow, a new bottleneck has emerged: tuning the many control parameters of an array by hand is no longer feasible. This makes R&D of this promising technology difficult: hand tuning by experts becomes harder because not only does the number of parameters increase, but the interactions between them also become more numerous and more complex. This process is time-consuming and very expensive. Tuning a device requires several steps, each of which can take several days to complete, and at each step errors can occur that force re-tuning of earlier steps or even starting from scratch with a new device. Moreover, devices drift over time and have to be re-tuned to their optimal operating point.

The lack of automatic tuning algorithms that can run on dedicated or embedded hardware is by now one of the biggest factors that hamper the growth of the nascent spin-qubit industry as a whole. What is needed is a platform of algorithms that can be fine-tuned to specific sensing hardware and which allows cold-start tuning of a device: that is, after the device is cooled, tuning it up to a specific operating regime and finding the parameters required to perform specific operations or measurements. Such a platform must include algorithms that are efficient, scalable and robust against common problems in manufactured devices.

The current landscape of automatic tuning algorithms does not fulfill these requirements. Many algorithms are developed specifically for common small device types and either do not scale to more complex devices or assume device geometries that many experimental devices do not have. Conversely, recent candidates for scalable algorithms are either purely theoretical or developed for simplified simulations, and they lack robustness to the difficulties encountered on real devices.

The research aims, outlined below, address the major obstacles in developing these algorithms:

Aim 1: Develop interpretable physics-inspired machine learning approaches (WP2)

Machine learning approaches often rely on flexible black-box models that solve a task with high precision. However, these models are not interpretable, which makes them unsuitable for many tasks in physics. Interpretable models, on the other hand, often lack the flexibility required to solve the task satisfactorily. We will develop physics-inspired models that add flexibility to physics-based models without compromising interpretability. A key element is to limit how far the flexible components can deviate from the underlying physical model. We will test robustness by applying the models to different devices tuned into regimes that require the additional flexibility.
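One way to read "limiting how far the flexible components can deviate" is a residual model whose correction is squashed into a fixed band around the physical prediction. The sketch below assumes a straight transition line as the physical model and a sum of Gaussian bumps as the flexible part; the function names, the tanh squashing, and the bound `max_dev` are illustrative assumptions, not the project's method.

```python
import numpy as np

# Hypothetical physics part: a straight charge-transition line v2 = slope * v1 + offset.
def physical_model(v1, slope, offset):
    return slope * v1 + offset

# Hypothetical flexible part: an unconstrained weighted sum of Gaussian bumps.
def flexible_term(v1, weights, centers, width):
    basis = np.exp(-((v1[:, None] - centers[None, :]) ** 2) / (2.0 * width**2))
    return basis @ weights

# Hybrid model: tanh squashes the flexible term, so |correction| <= max_dev always.
def hybrid_model(v1, slope, offset, weights, centers, width, max_dev):
    correction = max_dev * np.tanh(flexible_term(v1, weights, centers, width))
    return physical_model(v1, slope, offset) + correction

# Usage: even with very large random weights, the deviation stays inside the band.
rng = np.random.default_rng(0)
v1 = np.linspace(-1.0, 1.0, 200)
centers = np.linspace(-1.0, 1.0, 10)
weights = rng.normal(scale=50.0, size=centers.size)
max_dev = 0.05
y_phys = physical_model(v1, slope=-0.6, offset=0.5)
y_hybrid = hybrid_model(v1, -0.6, 0.5, weights, centers, 0.2, max_dev)
deviation = np.max(np.abs(y_hybrid - y_phys))
print(deviation <= max_dev + 1e-12)  # True
```

Because the correction can never exceed `max_dev`, the fitted `slope` and `offset` still have to explain the bulk of the data, which is what keeps them physically meaningful in this toy setup.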

Aim 2: Demonstrate practical scalability of algorithms based on line scans (WP1-3)

For an algorithm to be useful in practice, it must scale to large devices. One example of an approach that does not scale is the use of 2D raster scans to measure the angles and slopes of transitions, because the number of required 2D scans rises quickly with the number of device parameters. We will instead rely on 1D line scans and demonstrate on real devices that they can infer the same quantities as 2D scans with less measurement time.
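The scaling argument can be made concrete with a rough count. Assuming one r × r raster scan per pair of the N gates, the number of measured points grows as N(N-1)/2 · r², whereas k line scans per pair cost only N(N-1)/2 · k · r. The sketch below computes both counts and shows, on a toy sensor with a straight transition line, how two parallel line scans recover the transition slope. The sensor model, the per-pair scan budget, and all function names are illustrative assumptions.

```python
import numpy as np
from math import comb

def raster_cost(n_gates, pixels):
    # Assumption: one pixels x pixels 2D raster scan per pair of gates.
    return comb(n_gates, 2) * pixels**2

def line_scan_cost(n_gates, pixels, lines_per_pair=2):
    # Assumption: a few 1D line scans of `pixels` points per pair instead.
    return comb(n_gates, 2) * lines_per_pair * pixels

def sensor(v1, v2, slope=-0.6, offset=0.5):
    # Toy charge-sensor signal: steps from 0 to 1 across a straight transition line.
    return (v2 > slope * v1 + offset).astype(float)

def crossing_on_line(v1_fixed, v2_values, threshold=0.5):
    # 1D line scan: sweep v2 at fixed v1 and return the first v2 above threshold.
    signal = sensor(np.full_like(v2_values, v1_fixed), v2_values)
    return v2_values[np.argmax(signal > threshold)]

# Two parallel line scans locate the transition twice; their offset gives the slope.
v2_sweep = np.linspace(-1.0, 1.0, 2001)
v1_a, v1_b = 0.0, 0.4
slope_est = (crossing_on_line(v1_b, v2_sweep) - crossing_on_line(v1_a, v2_sweep)) / (v1_b - v1_a)

print(raster_cost(10, 100), line_scan_cost(10, 100))  # 450000 9000
print(slope_est)  # close to the true slope -0.6
```

Real transitions are neither perfectly straight nor noise-free, so in practice the crossing detection would need to be more robust than a single threshold.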

Aim 3: Automate discovery of optimal measurement strategies (WP3)

To keep devices at an optimal operating point, they have to be re-tuned frequently (e.g., every 100 ms). We will develop adaptable active learning and measurement selection strategies that allow the device parameters to be monitored and adapted while the device is running.
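As one illustration of measurement selection for monitoring, the sketch below tracks the position of a single transition along one gate voltage with probabilistic bisection. It keeps a posterior over candidate positions, always measures where the predicted sensor outcome is most uncertain (the posterior median), and updates the posterior assuming the readout flips with a known probability. The step-function sensor, the flip probability, and the function names are assumptions chosen for illustration; the strategies developed in the project may differ.

```python
import numpy as np

def read_sensor(v, v_true, flip_prob, rng):
    # Toy noisy readout: 1 if v lies above the transition, flipped with prob. flip_prob.
    clean = v > v_true
    flipped = rng.random() < flip_prob
    return int(clean != flipped)

def track_transition(v_true, n_meas=60, flip_prob=0.05, seed=0):
    rng = np.random.default_rng(seed)
    grid = np.linspace(0.0, 1.0, 1001)          # candidate transition positions
    post = np.full(grid.size, 1.0 / grid.size)  # uniform prior
    for _ in range(n_meas):
        # Measurement selection: query the posterior median, where outcomes 0 and 1
        # are about equally likely, i.e. the most informative single point.
        v = grid[np.searchsorted(np.cumsum(post), 0.5)]
        y = read_sensor(v, v_true, flip_prob, rng)
        # A hypothesis theta predicts y = 1 exactly when v > theta.
        consistent = (grid < v) == bool(y)
        post = post * np.where(consistent, 1.0 - flip_prob, flip_prob)
        post /= post.sum()
    return grid[np.argmax(post)]

print(track_transition(0.37))  # should be near 0.37
```

In this toy setting each query roughly halves the remaining uncertainty, so a few dozen single-point measurements replace a full sweep over all 1001 grid points; this kind of saving is what makes frequent re-tuning affordable.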

State of the art

Currently, the largest manufactured quantum-dot array has 16 qubits in a 4×4 configuration [1]. While promising, it has not been successfully controlled yet. The largest hand-tuned array has 8 qubits [2] in a 2×4 configuration, where the array was tuned to contain a single electron on each dot.

To date, most automatic tuning algorithms are compatible only with arrays of at most two qubits and use deep-learning techniques to address several steps of the tuning process. These steps include coarse tuning of a device into the region where it forms quantum dots [3], finding the empty charge state of the device [3], finding a regime with two distinct dots [4], and navigating to a regime with the correct number of charge states [5].

All these techniques are primarily based on 2D raster scans of the charge-sensor response given two control voltages and rely on additional heuristics to allow for efficient tuning.

Far less work has been done on techniques that support more than two qubits. For finding inter-dot electron transitions [6-7], an algorithm has been demonstrated on a silicon device with 3 dots and on an idealized simulated device with up to 16 dots in a 4×4 configuration, enabling automatic labeling of transitions. However, the device in [6] used a favorable sensor setup that does not apply to more general devices.

Value Creation

The project is ideally timed to realize its scientific impact through publications by the postdoctoral researcher and the development of open-source algorithms. We are at a turning point in Danish quantum efforts: in 2021 the EU funded the QLSI quantum consortium, a 10-year project to develop spin-qubit quantum-dot arrays with strong involvement of Danish collaborators. In 2022, the Novo Nordisk Foundation funded the Quantum for Life Center, and in 2023 the new Danish NATO center for Quantum Technologies will open at the University of Copenhagen. Moreover, the Novo Nordisk Foundation has made a long-term pledge to fund development of the first functional quantum computer until 2034. Given these long-term investments, the project's scientific outcomes will become available just as many other projects are starting and automatic tuning algorithms are becoming essential for many of these efforts. To verify the usefulness of the developed algorithms, the project will draw on an existing collaboration between QM (Quantum Machines), KU (University of Copenhagen), and the EU project IGNITE, which aims to develop a 48-spin-qubit device.

QM will create value by bundling its hardware solutions with tuned versions of the software, allowing its customers to develop and test their devices on a shorter time scale.

Moreover, this project will foster knowledge transfer between machine learning and quantum physics to support the continued development of high-quality machine-learning approaches. To this end, all participants will meet regularly, and the work will be presented at physics conferences.

Value

The project creates value by strategically strengthening its position in quantum technology development. This is achieved by creating scientific value through publications and the development of open-source algorithms. In addition, the project fosters knowledge transfer between machine learning and quantum physics, which ultimately enables a shorter development process for hardware solutions and contributes to growth in the field through interdisciplinary collaboration and outreach.

Participants

Project Manager

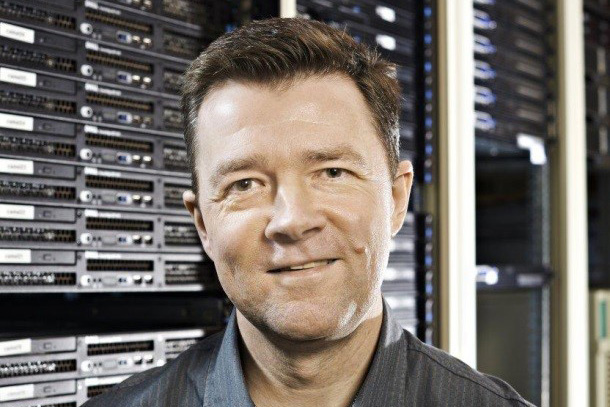

Oswin Krause

Assistant Professor

University of Copenhagen

Department of Computer Science

E: oswin.krause@di.ku.dk

Ferdinand Kuemmeth

Professor

University of Copenhagen

Niels Bohr Institute

Center for Quantum Devices

Anasua Chatterjee

Assistant Professor

University of Copenhagen

Niels Bohr Institute

Center for Quantum Devices

Jonatan Kutchinsky

General Manager

Quantum Machines

Joost van der Heijden

Scientific Business Development Manager

Quantum Machines

Partners